What Is Machine Learning Operations (MLOps)?

Machine learning ethics is becoming a field of study and, notably, is being integrated into machine learning engineering teams. Reinforcement learning is an area of machine learning concerned with how software agents should take actions in an environment in order to maximise some notion of cumulative reward. In reinforcement learning, the environment is typically represented as a Markov decision process (MDP).
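In an MDP, the "cumulative reward" an agent tries to maximise is usually the discounted return G = r_0 + γ·r_1 + γ²·r_2 + …, where the discount factor γ (between 0 and 1) makes later rewards count for less. The minimal sketch below computes that quantity for a single finished episode. The function name, the default γ of 0.99, and the example rewards are illustrative assumptions, not values taken from this article.

```python
from typing import Sequence


def discounted_return(rewards: Sequence[float], gamma: float = 0.99) -> float:
    """Compute G = r_0 + gamma*r_1 + gamma^2*r_2 + ... for one episode.

    Illustrative helper (hypothetical name); gamma is an assumed discount factor.
    """
    if not 0.0 <= gamma <= 1.0:
        raise ValueError("gamma must be in [0, 1]")
    total = 0.0
    # Walk the rewards backwards so each earlier step multiplies the
    # accumulated future return by one factor of gamma (Horner's rule).
    for r in reversed(rewards):
        total = r + gamma * total
    return total


if __name__ == "__main__":
    # 1 + 0.5*0 + 0.25*2 = 1.5
    print(discounted_return([1.0, 0.0, 2.0], gamma=0.5))  # 1.5
```

Working backwards avoids computing powers of γ explicitly. With γ = 1, the result reduces to the plain, undiscounted sum of rewards.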

The Ultimate Guide to MLOps: Best Practices and Scalable Tools for 2025

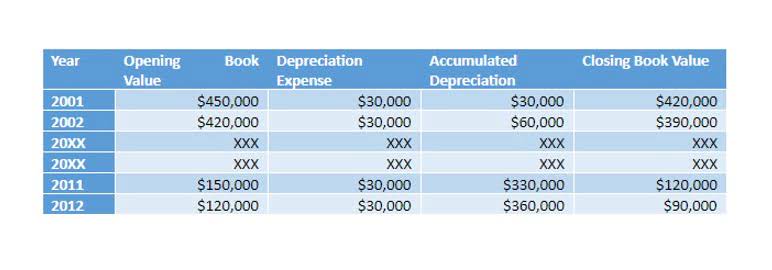

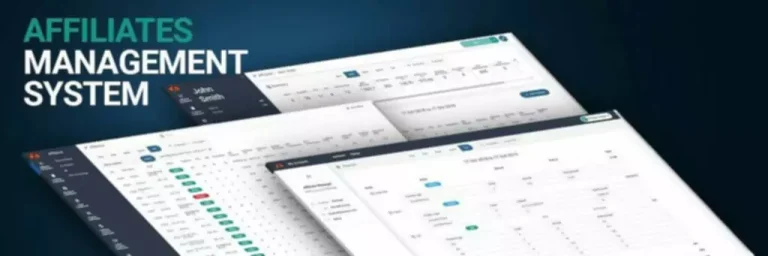

Data versioning and data provenance are important components of building reproducible ML systems. The kinds of problems you are solving will determine which of these resources are most relevant to your workflows. One part of AIOps is IT operations analytics, or ITOA, which examines the data AIOps generates to figure out how to improve IT practices. IBM® Granite™ is IBM's family of open, performant and trusted AI models, tailored for enterprise and optimized to scale your AI applications. Furthermore, LLMs offer potential benefits to MLOps practices, including automating documentation, assisting with code reviews and improving data preprocessing. These contributions could significantly improve the efficiency and effectiveness of MLOps workflows.
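A common building block for data versioning is a content hash: if the bytes of a dataset change, its fingerprint changes too. Provenance can then be captured by recording where each version came from and which version it was derived from. The sketch below assumes datasets are small enough to hash in memory. `DatasetVersion`, `register`, and the example file name are hypothetical and do not belong to any particular versioning tool.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Optional


def fingerprint(data: bytes) -> str:
    """Return a SHA-256 content hash that changes whenever the bytes change."""
    return hashlib.sha256(data).hexdigest()


@dataclass
class DatasetVersion:
    """Hypothetical provenance record for one version of a dataset."""
    name: str
    content_hash: str
    source: str                        # where the data came from (provenance)
    parent_hash: Optional[str] = None  # version this one was derived from, if any
    created_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def register(name: str, data: bytes, source: str,
             parent: Optional[DatasetVersion] = None) -> DatasetVersion:
    """Create a version record, linking it to its parent version when given."""
    return DatasetVersion(
        name=name,
        content_hash=fingerprint(data),
        source=source,
        parent_hash=parent.content_hash if parent else None,
    )


if __name__ == "__main__":
    raw = register("customers", b"id,age\n1,34\n2,41\n", source="crm_export.csv")
    clean = register("customers_clean", b"id,age\n1,34\n", source="dedupe step", parent=raw)
    print(json.dumps(asdict(clean), indent=2))
```

Dedicated data-versioning tools add storage, deduplication and remote backends on top of this idea. The core mechanism of "hash the content, record the lineage" stays the same.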

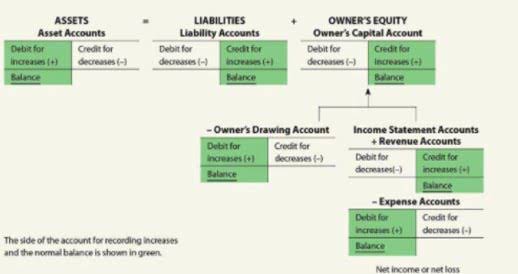

Even once you’ve built a functioning infrastructure, simply maintaining it and keeping it up to date with the latest technology requires lifecycle management and a dedicated team. MLOps can help your organization automate repetitive tasks, improve the reproducibility of workflows, and maintain model performance as data changes. By integrating DevOps principles, MLOps lets you streamline the lifecycle management of ML models, from development to maintenance. MLOps stage 2 represents a high level of automation, where deploying various ML experiments to production environments requires minimal to no manual effort.

The ML Pipeline Must Be Scalable

- Fundamentals of Machine Learning, Data Engineering, Model Development, Model Deployment, Containerization, Model Versioning, Monitoring ML Models and A/B Testing.

- MLOps is transforming the way organizations develop, deploy, and manage machine learning models.

- One of the primary benefits of MLOps is ensuring that machine learning models remain reliable and performant over time.

- Managing these dependencies can be challenging, particularly in large-scale deployments with multiple models.

- By receiving timely alerts, data scientists and engineers can quickly investigate and address these issues, minimizing their impact on the model’s performance and the end users’ experience.
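Timely alerts can be as simple as a rolling-window check: keep the most recent labeled predictions, and flag the model when accuracy over that window falls below an agreed threshold. The sketch below is a minimal illustration under that assumption. `RollingAccuracyMonitor`, the window size, and the threshold are hypothetical. A real deployment would send the alert to a paging or chat system instead of returning a string.

```python
from collections import deque
from typing import Any, Deque, Optional


class RollingAccuracyMonitor:
    """Hypothetical monitor tracking accuracy over the last `window` predictions."""

    def __init__(self, window: int = 100, threshold: float = 0.9) -> None:
        # True for a correct prediction, False otherwise; old entries fall off.
        self.outcomes: Deque[bool] = deque(maxlen=window)
        self.threshold = threshold

    def record(self, prediction: Any, actual: Any) -> Optional[str]:
        """Log one labeled prediction; return an alert message if accuracy is too low."""
        self.outcomes.append(prediction == actual)
        # Only evaluate once the window is full, to avoid noisy early alerts.
        if len(self.outcomes) < self.outcomes.maxlen:
            return None
        accuracy = sum(self.outcomes) / len(self.outcomes)
        if accuracy < self.threshold:
            return f"ALERT: rolling accuracy {accuracy:.2f} below {self.threshold:.2f}"
        return None


if __name__ == "__main__":
    monitor = RollingAccuracyMonitor(window=4, threshold=0.75)
    alert = None
    for pred, actual in [(1, 1), (0, 0), (1, 0), (1, 0)]:
        alert = monitor.record(pred, actual)
    print(alert)  # ALERT: rolling accuracy 0.50 below 0.75
```

Accuracy requires ground-truth labels, which often arrive late in production. Where labels are unavailable, teams typically monitor input distributions for drift instead.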

Whether you’re operationalizing your first model or managing hundreds across departments, these are the pillars of modern, sustainable MLOps. First and foremost, if our pipeline isn’t built so that each stage flows into the next, there isn’t much we can do when it comes to orchestration. From the design stage, our pipelines should be built so that every stage can hand off to the next without much friction or extra manual steps. If we can create a template for the pipeline, we can avoid many unnecessary complications when trying to get our system to work in the first place.
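One way to read the "template" advice is to give every stage the same interface. Each stage's output then becomes the next stage's input, and an orchestrator can chain the stages without glue code. The minimal sketch below assumes each stage is a plain function that takes a dictionary and returns a dictionary. The stage names and the stand-in "model" (a mean) are hypothetical placeholders for real ingestion, preprocessing and training steps.

```python
from typing import Any, Callable, Dict, List

Context = Dict[str, Any]
Stage = Callable[[Context], Context]


def run_pipeline(stages: List[Stage], context: Context) -> Context:
    """Run stages in order; each stage receives the previous stage's output."""
    for stage in stages:
        context = stage(context)
        # Record which stages ran, which helps with debugging and auditing.
        context.setdefault("completed", []).append(stage.__name__)
    return context


# Hypothetical stages that all share the same Context -> Context signature.
def ingest(ctx: Context) -> Context:
    return {**ctx, "rows": [3.0, 1.0, 2.0]}


def preprocess(ctx: Context) -> Context:
    return {**ctx, "rows": sorted(ctx["rows"])}


def train(ctx: Context) -> Context:
    # Stand-in "model": the mean of the training rows.
    return {**ctx, "model": sum(ctx["rows"]) / len(ctx["rows"])}


if __name__ == "__main__":
    result = run_pipeline([ingest, preprocess, train], {})
    print(result["model"], result["completed"])  # 2.0 ['ingest', 'preprocess', 'train']
```

Because every stage has the same signature, adding, removing or reordering stages does not require changing the runner. Workflow orchestrators apply the same principle at a larger scale.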

This includes defining the desired outcomes, key performance indicators (KPIs), and the specific objectives that the machine learning models are expected to achieve. Machine learning (ML) models are changing how organizations use data, helping them use it more effectively. They enable the automation of complex data analysis tasks and generate accurate predictions from large datasets.
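Once KPIs and objectives are written down, they can be checked automatically, for example as a gate before a model is promoted. The sketch below assumes each KPI can be expressed as a minimum acceptable value for an evaluation metric. `check_kpis`, the metric names, and the targets are hypothetical.

```python
from typing import Dict, List, Tuple


def check_kpis(metrics: Dict[str, float],
               targets: Dict[str, float]) -> Tuple[bool, List[str]]:
    """Compare evaluation metrics against minimum KPI targets.

    Returns (passed, failures), where failures lists each missed KPI.
    Assumes "higher is better" for every metric.
    """
    failures: List[str] = []
    for name, minimum in targets.items():
        value = metrics.get(name)
        if value is None:
            failures.append(f"{name}: missing")
        elif value < minimum:
            failures.append(f"{name}: {value:.3f} < {minimum:.3f}")
    return (not failures, failures)


if __name__ == "__main__":
    targets = {"precision": 0.80, "recall": 0.70}  # hypothetical KPI targets
    passed, failures = check_kpis({"precision": 0.85, "recall": 0.65}, targets)
    print(passed, failures)  # False ['recall: 0.650 < 0.700']
```

Metrics where lower is better, such as latency or error rate, would need a maximum rather than a minimum. The sketch leaves that out to stay short.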

It aims to move machine learning models from design to production with agility and minimal cost, while also monitoring that models meet the expected objectives. Feature management and pipeline management tools provide two complementary approaches to enabling data processing, development-to-production workflows, and collaboration. Feature stores enable users to track derived, aggregated, or expensive-to-compute features for development and production, along with their provenance.
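To illustrate what tracking features "along with their provenance" means, here is a toy in-memory feature registry. Each feature is stored with the function that computes it and the source it reads from, so training and serving use one shared definition. This is not the API of any real feature store product. `FeatureRegistry`, `FeatureDefinition`, and the example feature and source are hypothetical.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List


@dataclass(frozen=True)
class FeatureDefinition:
    """Hypothetical feature definition: name, provenance, and computation."""
    name: str
    source: str  # provenance: where the raw inputs come from
    compute: Callable[[Dict[str, Any]], float]


class FeatureRegistry:
    """Toy registry so training and serving share one feature definition."""

    def __init__(self) -> None:
        self._features: Dict[str, FeatureDefinition] = {}

    def register(self, feature: FeatureDefinition) -> None:
        # Refuse silent redefinition, which would break reproducibility.
        if feature.name in self._features:
            raise ValueError(f"feature {feature.name!r} already registered")
        self._features[feature.name] = feature

    def compute(self, names: List[str], record: Dict[str, Any]) -> Dict[str, float]:
        """Compute the requested features for one raw input record."""
        return {n: self._features[n].compute(record) for n in names}

    def provenance(self, name: str) -> str:
        return self._features[name].source


if __name__ == "__main__":
    registry = FeatureRegistry()
    registry.register(FeatureDefinition(
        name="avg_order_value",
        source="orders table",  # hypothetical source
        compute=lambda r: sum(r["orders"]) / max(len(r["orders"]), 1),
    ))
    print(registry.compute(["avg_order_value"], {"orders": [10.0, 30.0]}))  # {'avg_order_value': 20.0}
    print(registry.provenance("avg_order_value"))  # orders table
```

Production feature stores add offline and online storage, point-in-time-correct joins and materialization. The idea of one registered definition with recorded lineage is the part this sketch shows.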

Today, cloud infrastructure often exists side by side with on-premises systems. Data scientists can spend more of their time on what they were hired to do (shipping high-impact models) while the cloud provider takes care of the rest. Cloud computing companies have invested hundreds of billions of dollars in infrastructure and management.

MLOps aims to reduce the time and resources it takes to run data science models. Organizations collect large amounts of data, which holds valuable insights into their operations and their potential for improvement. Machine learning, a subset of artificial intelligence (AI), empowers businesses to leverage this data with algorithms that uncover hidden patterns and reveal insights. However, as ML becomes increasingly integrated into everyday operations, managing these models effectively becomes paramount to ensure continuous improvement and deeper insights. MLOps is a set of best practices specifically designed for machine learning projects.

Machine Learning Operations, or MLOps, refers to the principles, practices, culture, and tools that enable organizations to develop, deploy, and maintain production machine learning and AI systems. Train, validate, tune and deploy generative AI, foundation models and machine learning capabilities with IBM watsonx.ai, a next-generation enterprise studio for AI builders. Monitoring the performance and health of ML models is essential to ensure that they continue to meet the intended objectives after deployment. This process involves regularly assessing for model drift, bias and other potential issues that might compromise their effectiveness. By proactively identifying and addressing these concerns, organizations can maintain optimal model performance, mitigate risks and adapt to changing conditions or feedback.
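Drift can be assessed in many ways. One simple, widely used metric is the Population Stability Index (PSI), which compares how a feature's values are distributed at training time with what the model sees in production:

PSI = Σ (a_i − e_i) · ln(a_i / e_i)

Here e_i and a_i are the fractions of reference and production values that fall in bin i. The sketch below is one possible approach, not a method this article prescribes. It uses only the standard library, bins on the reference sample's quantiles, and treats PSI above 0.2 as drift. The 10 bins and the 0.2 cut-off are common rules of thumb, and the function names are hypothetical.

```python
import math
from bisect import bisect_right
from typing import List, Sequence


def _bin_fractions(values: Sequence[float], edges: List[float]) -> List[float]:
    """Fraction of values in each bin defined by sorted interior cut points."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    n = len(values)
    # A small floor avoids log(0) and division by zero for empty bins.
    return [max(c / n, 1e-6) for c in counts]


def population_stability_index(expected: Sequence[float],
                               actual: Sequence[float],
                               bins: int = 10) -> float:
    """PSI between a reference sample (`expected`) and a production sample (`actual`)."""
    if len(expected) < bins or not actual:
        raise ValueError("need at least `bins` reference values and a non-empty actual sample")
    ref = sorted(expected)
    # Interior cut points at the reference sample's quantiles.
    edges = [ref[int(len(ref) * k / bins)] for k in range(1, bins)]
    e = _bin_fractions(expected, edges)
    a = _bin_fractions(actual, edges)
    return sum((ai - ei) * math.log(ai / ei) for ai, ei in zip(a, e))


def drift_alert(expected: Sequence[float], actual: Sequence[float],
                threshold: float = 0.2) -> bool:
    """Hypothetical helper: flag drift when PSI exceeds an assumed threshold."""
    return population_stability_index(expected, actual) > threshold


if __name__ == "__main__":
    baseline = [float(x) for x in range(100)]
    shifted = [x + 50 for x in baseline]
    print(drift_alert(baseline, baseline), drift_alert(baseline, shifted))  # False True
```

PSI only detects changes in input distributions. It says nothing directly about accuracy or bias, so it usually complements, rather than replaces, performance and fairness monitoring.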

MLOps is an evolving field, and organizations should embrace continuous learning and improvement. This includes staying up to date on the latest tools, techniques, and best practices, as well as continually refining MLOps processes based on feedback and new insights. The maturity of MLOps practices used in business today varies widely, according to Edwin Webster, a data scientist who started the MLOps consulting practice for Neal Analytics and wrote an article defining MLOps. At some companies, data scientists still squirrel away models on their personal laptops, while others turn to big cloud-service providers for a soup-to-nuts service, he said.

We were (and still are) studying the waterfall model, the iterative model, and agile models of software development. “Other” issues reported included the need for a very different skill set and lack of access to specialized compute and storage. The overwhelming majority of cloud stakeholders (96%) face challenges managing both on-prem and cloud infrastructure. It can’t leave their servers because, if even a small vulnerability were exploited, the ripple effect could be catastrophic. As mentioned above, one survey shows that 65% of a data scientist’s time is spent on non-data-science tasks.